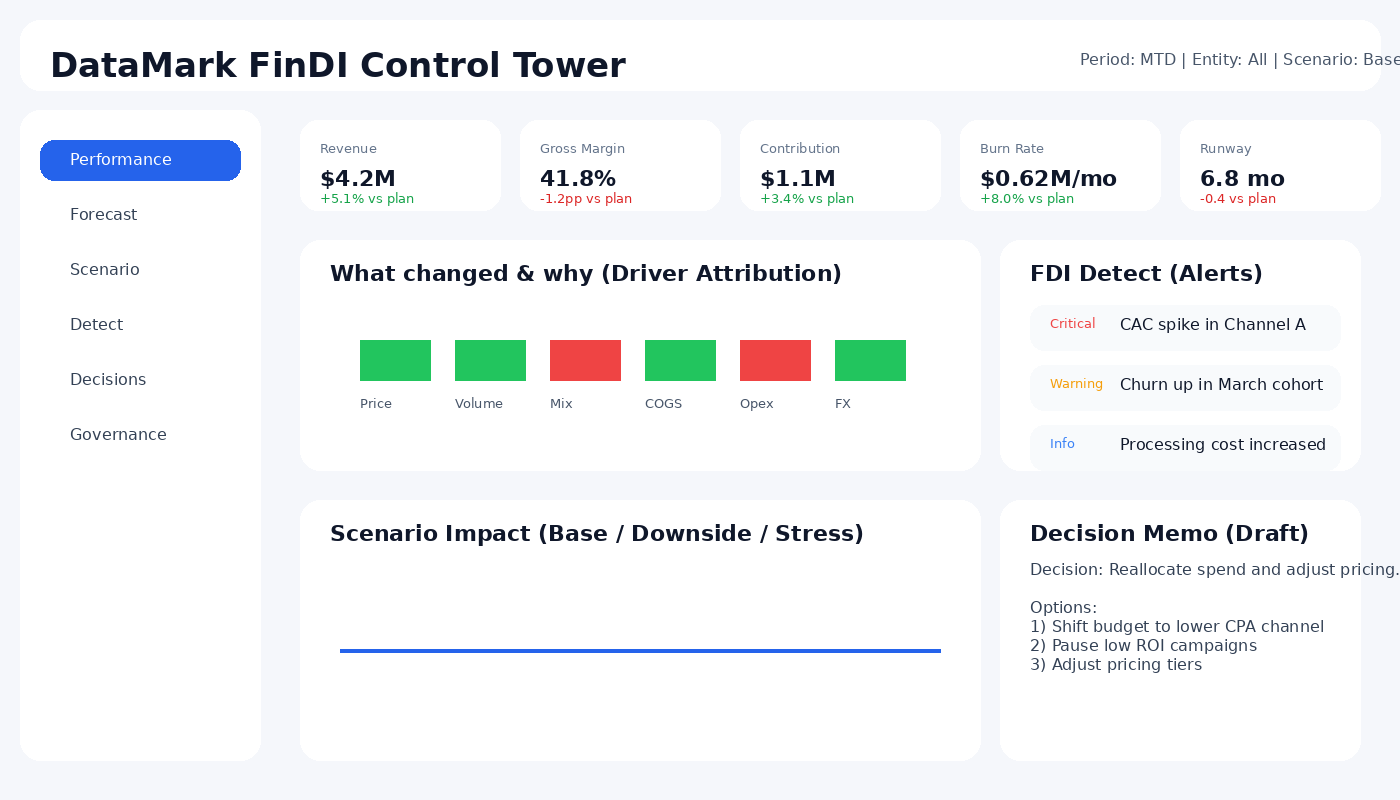

DataMark is a fast-growing analytics and AI company building high-performance data systems for financial institutions, banks, fintech platforms, and enterprises. We develop forecasting engines, risk analytics pipelines, and intelligent data products powered by scalable cloud and big-data architectures.

To support our expanding data platform and next-generation products, we are looking for a highly skilled Data Engineer to join our core engineering team.

Position: Data Engineer (Full-time)

Location: Ho Chi Minh City / Remote (Hybrid)

Department: Data Engineering & Infrastructure

Reports to: Lead Data Engineer / Head of Data Platform

Role Overview

As a Data Engineer at DataMark, you will design, build, and optimize data pipelines and data platforms that power our analytics and machine-learning products.

You will work with large-scale datasets (financial, macroeconomic, behavioral, transactional) and build robust, automated, cloud-native data systems that enable high-quality analysis and real-time intelligence.

This role is ideal for someone who enjoys solving complex data problems, thinks in systems, and builds scalable solutions.

Key Responsibilities

Data Pipeline Development

- Design and develop batch and streaming ETL/ELT pipelines.
- Build workflow orchestration using Airflow, Prefect, Dagster, or similar platforms.
- Implement real-time ingestion and streaming systems using Kafka, Pub/Sub, or Kinesis.

Data Architecture & Warehousing

- Develop and maintain data lakes and data warehouses using BigQuery, Snowflake, Redshift, or Databricks.
- Implement efficient data models: star schema, wide tables, and lakehouse structures.
- Optimize performance with partitioning, clustering, caching, and storage formats (Parquet/Delta).

Data Quality & Governance

- Build monitoring tools for data accuracy, completeness, and freshness.
- Implement metadata management, data lineage, and documentation standards.
- Enforce security best practices: IAM roles, encryption, audit trails.

Collaboration & Integration

- Partner with Data Scientists to deliver model-ready datasets.
- Support analytics teams with stable data marts and BI layers.
- Collaborate with DevOps/SRE on CI/CD, containerization, deployment, and observability.

Performance Tuning

- Diagnose and resolve performance bottlenecks in distributed processing systems.
- Optimize compute and storage costs in cloud environments.
- Improve reliability and scalability of mission-critical data services.

Required Qualifications

- Bachelor’s degree in Computer Science, Data Engineering, Information Systems, or an equivalent field.
- Strong proficiency in Python and SQL.
- Experience with big-data frameworks such as Apache Spark, Flink, or Beam.
- Hands-on experience with cloud platforms (AWS, GCP, or Azure) and data warehousing tools.
- Understanding of data modeling, distributed systems, and scalable architectures.
- Familiarity with Git, Docker, and CI/CD workflows.

Preferred Qualifications

- Experience with streaming systems (Kafka, Pub/Sub, or Kinesis).
- Experience with Databricks or cloud-native lakehouse architectures.
- Background in financial data, risk systems, or analytics platforms.
- Knowledge of data quality and observability tools (Great Expectations, Monte Carlo, Soda).
- Experience building production-grade, automated data pipelines.

What We Offer

- Competitive salary and performance bonus.
- Opportunity to build large-scale data infrastructure for high-impact financial AI products.
- Modern tech stack: cloud data warehouses, Spark/Databricks, streaming systems, and orchestration frameworks.
- Clear career growth path toward Senior Data Engineer, Data Architect, or Platform Engineer.
- Hybrid working model, flexible hours, and an engineering-driven culture.
- Exposure to real-world financial, macroeconomic, and risk-intelligence datasets.

How to Apply

Please send your resume/CV, along with your GitHub profile or links to relevant projects, to:

contact@datamark.vn

Subject: Application – Data Engineer – [Your Name]